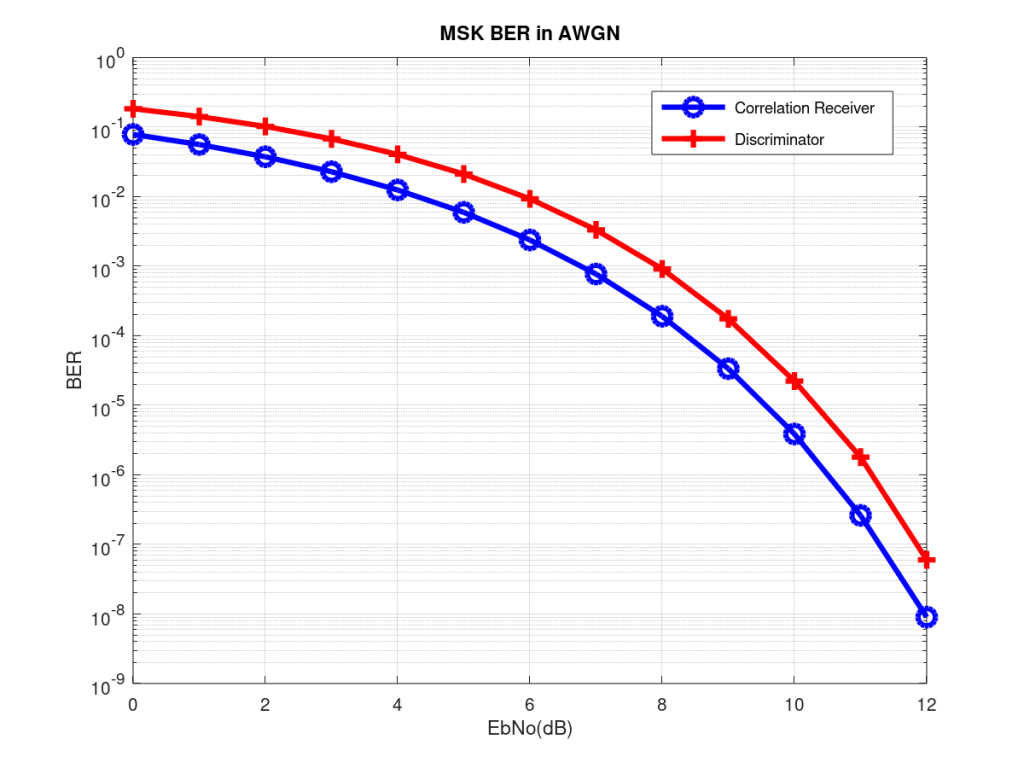

It is widely believed that non-coherent receivers perform much worse than coherent receivers in terms of Bit Error Rate (BER). Although this is true to some extent, as we show in this post the difference is not that large for Minimum Shift Keying (MSK). In fact, the difference is only about 1 dB in an AWGN environment at high Signal to Noise Ratio (SNR). The difference is somewhat larger in a flat fading environment, but given how simple a non-coherent receiver is to implement, the trade-off might be worth it.

Given below are the Octave code and simulation results for a discriminator-based MSK receiver in an AWGN environment. At low SNR the difference in performance is about 2 dB, but it shrinks to less than 1 dB in the high SNR region (there is a slight difference in how EbNo and SNR are defined, but we use the two interchangeably). In a flat fading environment the difference is about 3-4 dB, with all other variables kept the same. Note also that we are using one sample per symbol; the results change somewhat if we increase the number of samples per symbol.

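```octave
%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%
% MINIMUM SHIFT KEYING %
% %
% BER OF MSK IN AWGN %
% WITH DISCRIMINATOR DETECTOR %
% %
% www.raymaps.com %
%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

clear all
close all

no_of_bits=1e7;
Eb=1;
EbNodB=10;

% MODULATION
bits_in=round(rand(1,no_of_bits));
bipolar_symbols=bits_in*2-1;
% MSK: the phase advances by +pi/2 or -pi/2 over each bit
cumulative_phase=(pi/2)*cumsum(bipolar_symbols);
tx_signal=exp(1i*cumulative_phase);

% AWGN CHANNEL
EbNo=10^(EbNodB/10);
% one unit-amplitude sample per symbol, so Es=Eb and the
% per-component noise variance is N0/2 = Eb/(2*EbNo)
sigma=sqrt(Eb/(2*EbNo));
AWGN_noise=randn(1,no_of_bits)+1i*randn(1,no_of_bits);
rx_signal=tx_signal+sigma*AWGN_noise;

% DEMODULATION
% multiply each sample by the conjugate of the previous one; the first
% sample is referenced to the initial phase of 0 (the value 1)
complex_rotation=([rx_signal, 1]).*conj([1, rx_signal]);
angle_increment=arg(complex_rotation(1:end-1));
% discriminator decision: +1 if the phase increased, -1 if it decreased
detected_symbol=sign(angle_increment);
bits_out=detected_symbol*0.5+0.5;

% BER CALCULATION
ber_simulated=sum(bits_out!=bits_in)/no_of_bits;
% coherent MSK reference used for comparison
ber_theoretical=0.5*erfc(sqrt(EbNo));
printf("EbNo = %d dB: simulated BER = %g, coherent theory = %g\n", EbNodB, ber_simulated, ber_theoretical);
```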
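The listing above evaluates a single Eb/No point. Below is a minimal sketch of a sweep over several points, under the same assumptions (one unit-amplitude sample per symbol, so Es = Eb). The bit count, the Eb/No range and the seeds are illustrative choices, and the decision line is an equivalent vectorized rewrite of the one in the listing. Besides the coherent expression from the post, the sketch also prints 0.5*exp(-EbNo): in this one-sample-per-symbol model, the sign of the phase increment equals the sign of the imaginary part of r[n]·conj(r[n-1]), which after a fixed 90° rotation is exactly DBPSK differential detection, whose BER is 0.5·exp(-Eb/N0).

```octave
% BER sweep for the one-sample-per-symbol discriminator receiver.
% Assumed, illustrative settings: 1e6 bits per point, Eb/No = 0:2:10 dB.
rand("state", 1); randn("state", 1);     % fixed seeds for repeatable runs
no_of_bits = 1e6;
Eb = 1;
EbNodB_range = 0:2:10;
ber_simulated = zeros(size(EbNodB_range));
ber_theoretical = zeros(size(EbNodB_range));
ber_dbpsk = zeros(size(EbNodB_range));
for k = 1:numel(EbNodB_range)
  EbNo = 10^(EbNodB_range(k)/10);
  bits_in = rand(1, no_of_bits) > 0.5;
  tx_signal = exp(1i*(pi/2)*cumsum(2*bits_in - 1));   % +/- pi/2 per bit
  sigma = sqrt(Eb/(2*EbNo));                          % N0/2 per component
  rx_signal = tx_signal + sigma*(randn(1, no_of_bits) + 1i*randn(1, no_of_bits));
  % phase increment between consecutive samples (initial reference = 1)
  angle_increment = angle(rx_signal .* conj([1, rx_signal(1:end-1)]));
  bits_out = angle_increment > 0;                     % discriminator decision
  ber_simulated(k) = mean(bits_out != bits_in);
  ber_theoretical(k) = 0.5*erfc(sqrt(EbNo));          % coherent MSK
  ber_dbpsk(k) = 0.5*exp(-EbNo);                      % differential detection
end
printf("%6s %12s %12s %12s\n", "EbNo", "simulated", "coherent", "0.5exp(-x)");
printf("%6d %12.3e %12.3e %12.3e\n", [EbNodB_range; ber_simulated; ber_theoretical; ber_dbpsk]);
```

If a figure is wanted, `semilogy(EbNodB_range, [ber_simulated; ber_theoretical; ber_dbpsk])` draws the three curves on one set of axes.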

Notes:

1. The discriminator detector simply outputs +1 if the phase is increasing and -1 if the phase is decreasing.

2. In the code above we first find the rotation of the received complex exponential over one symbol period (by multiplying each sample by the conjugate of the previous one) and then take the angle of that rotation. Alternatively, the discriminator can be implemented by first finding the phase of the received signal and then taking its time derivative (the difference between consecutive samples, with the phase unwrapped). The results remain exactly the same.

3. The results are much worse if we oversample, i.e. if we increase the number of samples per symbol. This is not fully understood at the moment and will be the subject of a future post.

4. One advantage of a non-coherent receiver architecture is that it does not require carrier phase synchronization.
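Note 2 can be checked numerically. The sketch below assumes the same one-sample-per-symbol model at an illustrative Eb/No of 10 dB and builds the phase increment both ways: as the angle of r[n]·conj(r[n-1]), and as the difference of the unwrapped instantaneous phase computed with Octave's `unwrap`. The unwrap step matters: a raw `diff(angle(...))` jumps by 2π whenever the phase wraps, which would flip some decisions.

```octave
% Two ways to build the discriminator, compared on the same received signal.
% Assumed, illustrative settings: 1e5 bits, Eb = 1, Eb/No = 10 dB.
rand("state", 2); randn("state", 2);
no_of_bits = 1e5;
EbNo = 10^(10/10);
bits_in = rand(1, no_of_bits) > 0.5;
tx_signal = exp(1i*(pi/2)*cumsum(2*bits_in - 1));
sigma = sqrt(1/(2*EbNo));
rx_signal = tx_signal + sigma*(randn(1, no_of_bits) + 1i*randn(1, no_of_bits));

% Method A (as in the post): angle of the rotation between consecutive samples
increment_a = angle(rx_signal .* conj([1, rx_signal(1:end-1)]));

% Method B: instantaneous phase, unwrapped, then differentiated.
% The leading 1 is the same initial reference (phase 0) used in method A.
inst_phase = unwrap(angle([1, rx_signal]));
increment_b = diff(inst_phase);

bits_a = increment_a > 0;
bits_b = increment_b > 0;
printf("decisions that differ: %d of %d\n", sum(bits_a != bits_b), no_of_bits);
printf("BER: %g\n", mean(bits_a != bits_in));
```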

7 thoughts on “MSK Demodulation Using a Discriminator”

I have observed the same deviation from the theoretically predicted BER performance in my own multiple-samples-per-symbol simulations (in AWGN). I was wondering if you have come across either an explanation or another resolution to this issue?

Hi Paul,

Great question. I am just thinking that maybe we are losing something in the simple averaging. Did you try using some other type of filter?

Yasir

John: Thank you for replying so quickly!

I’m not sure what you mean by “filter”.

I’ve tried a few different (non-coherent) demodulator constructions, and I’m currently using a (textbook) correlator method: comparing the squared magnitudes (real² + imag²) of the correlations between the received signal and the two “carrier tones”.

At 10 dB Eb/No, the simulation result is about 1 dB worse than the non-coherent theoretical expression (running 16-million-bit simulations per Eb/No value). The correlations are computed exactly over each symbol duration (i.e., with no symbol timing errors).

In my current application, this may not be a significant concern, as I expect the (effective) Eb/No of the operational environment to be significantly less than 10 dB. But I do find it worrisome when I can’t match theoretical results in a “pristine” simulation environment.
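For comparison with the discriminator, here is a minimal sketch of a correlator receiver of the kind described in this comment. It assumes 8 samples per symbol, perfect symbol timing and unit-amplitude samples (so Eb = Ns and the per-component noise variance is Ns/(2*EbNo)); the sample count, Eb/No points, seeds and variable names are illustrative, not taken from the commenter's code. Each symbol is correlated with the two MSK tones at ±1/(4T) relative to the carrier, and the tone with the larger squared magnitude wins, so no carrier phase is needed.

```octave
% Non-coherent correlator (two-tone energy) detector for MSK, oversampled.
% Assumed, illustrative settings: Ns = 8, 2e5 bits, Eb/No of 4 and 10 dB.
rand("state", 3); randn("state", 3);
no_of_bits = 2e5;
Ns = 8;                                   % samples per symbol
EbNodB_range = [4 10];
ramp = (pi/2)*(1:Ns).'/Ns;                % phase ramp of a +1 symbol (Ns x 1)
% normalized cross-correlation magnitude between the two tones
rho = abs(sum(exp(2i*ramp)))/Ns;
ber = zeros(size(EbNodB_range));
for k = 1:numel(EbNodB_range)
  EbNo = 10^(EbNodB_range(k)/10);
  bits_in = rand(1, no_of_bits) > 0.5;
  b = 2*bits_in - 1;
  % phase at the start of each symbol (continuous-phase MSK)
  start_phase = (pi/2)*[0, cumsum(b(1:end-1))];
  % one column per symbol: start phase plus a +/- pi/2 linear ramp
  tx = exp(1i*(start_phase + ramp*b));
  % unit-amplitude samples give Eb = Ns, so N0/2 = Ns/(2*EbNo) per component
  sigma = sqrt(Ns/(2*EbNo));
  rx = tx + sigma*(randn(Ns, no_of_bits) + 1i*randn(Ns, no_of_bits));
  % correlate each symbol with the +1/(4T) and -1/(4T) tones
  z_plus  = sum(rx .* exp(-1i*ramp), 1);
  z_minus = sum(rx .* exp( 1i*ramp), 1);
  % pick the tone with the larger squared magnitude (carrier phase unknown)
  bits_out = abs(z_plus).^2 > abs(z_minus).^2;
  ber(k) = mean(bits_out != bits_in);
end
printf("tone cross-correlation |rho| = %.4f\n", rho);
printf("EbNo = %2d dB: BER = %.3e\n", [EbNodB_range; ber]);
```

One point worth keeping in mind when comparing such a correlator with textbook non-coherent FSK curves: those expressions assume tones that remain orthogonal for an arbitrary carrier phase, which requires a spacing that is a multiple of 1/T, whereas the MSK tones are only 1/(2T) apart. The `rho` printed above (about 0.64 for 8 samples) quantifies how far from orthogonal they are in this setting.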

I mean that after you take the phase difference, you do not just sum or average the values; you apply a filter, such as an FIR filter. Summation is a very simple filter.

I hope that makes sense!

Ah, so I believe you mean “filter” in the sense of an “Integrate & Dump” filter. Since the (noiseless) phase slope vs. time is a constant over the symbol period, I would think an (unshaped) I&D is the optimal “matched filter” (in the phase domain). Perhaps I need to try “my” non-coherent demodulator on other frequency-modulated waveforms (for which theoretical expressions exist). Given your post and textbook comments on the subject, I was hoping that you might have made some progress I could “leverage” to reduce my effort. But thank you for the conversation anyway … it does stimulate further thought.

So Integrate and Dump is the optimal filter, interesting. I think you may be right.

So I revisited my simulations from a few years back and found the same result: BER does degrade as the number of samples per symbol increases. This is because I increase the noise power along with the number of samples per symbol to keep the comparison fair. However, if I do not do this and keep Eb=Es constant, the results are very close to the correlation receiver results (only about 1 dB worse). But this is obviously wrong!
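A minimal sketch of the oversampled discriminator discussed in this thread follows. It assumes Ns samples per symbol with a linear MSK phase ramp inside each symbol, unit-amplitude samples, and noise scaled with Ns so that Eb/No stays fixed, as described in the comment above; the per-sample phase increments are then summed over each symbol (an integrate-and-dump in the phase domain). The Eb/No value, the Ns values and the seeds are illustrative. One way to see why oversampling hurts in this particular setup: the wrapped increments telescope, so, apart from 2π clicks, their sum over a symbol depends only on the two samples at the symbol boundaries, while each of those samples now carries only 1/Ns of the symbol energy.

```octave
% Oversampled discriminator with integrate-and-dump of phase increments.
% Assumed, illustrative settings: Eb/No = 10 dB, 2e5 bits, Ns = 1 and 8.
rand("state", 4); randn("state", 4);
no_of_bits = 2e5;
EbNo = 10^(10/10);
Ns_values = [1 8];                       % samples per symbol to compare
ber = zeros(size(Ns_values));
for k = 1:numel(Ns_values)
  Ns = Ns_values(k);
  ramp = (pi/2)*(1:Ns).'/Ns;             % phase ramp of a +1 symbol
  bits_in = rand(1, no_of_bits) > 0.5;
  b = 2*bits_in - 1;
  start_phase = (pi/2)*[0, cumsum(b(1:end-1))];
  tx = exp(1i*(start_phase + ramp*b));   % Ns x no_of_bits, one column per symbol
  sigma = sqrt(Ns/(2*EbNo));             % keep Eb/No fixed: Eb = Ns here
  rx = tx + sigma*(randn(Ns, no_of_bits) + 1i*randn(Ns, no_of_bits));
  r = rx(:);                             % serialize, symbol by symbol
  increments = angle(r .* conj([1; r(1:end-1)]));
  % integrate and dump: total phase change over each symbol
  phase_per_symbol = sum(reshape(increments, Ns, no_of_bits), 1);
  bits_out = phase_per_symbol > 0;
  ber(k) = mean(bits_out != bits_in);
  printf("Ns = %d: BER = %.3g\n", Ns, ber(k));
end
```

With Ns = 1 this reduces to the receiver in the post. Replacing `sigma` with `sqrt(1/(2*EbNo))`, i.e. not scaling the noise with Ns, gives the other case mentioned in the comment.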